i think that preferred contact is by matrix

he’s either out of fucks, or knows exactly what he’s doing

drones can also be shot down, and their operators radiolocated very quickly

no, because they have separate comms using completely different bands. esp. when you’re talking about military ones

if you switch to a different band, probably nonstandard and unlicensed, then there must be someone else listening on it

real. every prediction i got from computational chemists was wrong

the waste heat comes from the cryogenic system that keeps all of this helium below 3 K. turns out you need to spend a lot of energy to cool things down to temperatures this low
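to put a rough number on “a lot of energy”: a minimal sketch of the ideal (Carnot) limit for pumping heat out of a cold stage, assuming the heat is rejected at a room temperature of 300 K. the function name is just for illustration, and real cryocoolers need considerably more work than this bound, so treat it as a floor.

```python
# Ideal (Carnot) lower bound on refrigeration work; a sketch, not a cryocooler model.

def carnot_work_per_watt(t_cold_k: float, t_hot_k: float = 300.0) -> float:
    """Minimum watts of work per watt of heat removed at t_cold_k.

    Carnot COP of a refrigerator is T_c / (T_h - T_c), so the work needed
    per unit of heat lifted is 1 / COP = (T_h - T_c) / T_c.
    """
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("need 0 < t_cold_k < t_hot_k")
    return (t_hot_k - t_cold_k) / t_cold_k


# Compare a few common cryogenic temperatures (300 K heat rejection assumed).
for t_cold in (77.0, 20.0, 4.2, 3.0):
    w = carnot_work_per_watt(t_cold)
    print(f"{t_cold:>5} K: at least {w:6.1f} W of work per W removed")
```

even in the ideal case, holding something at 3 K costs about 99 W of work for every watt leaking in, and all of that work ends up as heat on the warm side.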

you can’t turn a gas into a liquid by compression alone if its temperature is above the critical point; you also need to cool it down. for nitrogen the critical point is somewhere around -150C. separation is done by fractional distillation, but the reason it’s done at all is mostly oxygen (medical and steelmaking, among some other uses). first the air is stripped of water and carbon dioxide, then it’s turned into a liquid, then it’s separated into oxygen, nitrogen and argon, and some large specialized plants also separate xenon, krypton and neon
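a small sketch of the two ideas above, using rounded handbook values in kelvin: whether compression alone can liquefy a gas at room temperature (only if it’s below its critical temperature), and the order components come off in distillation (lowest boiling point is most volatile and goes to the top of the column). function names are made up for illustration; carbon dioxide is included only to show a gas that *can* be liquefied by squeezing at room temperature.

```python
# Rounded handbook values in kelvin.
CRITICAL_T_K = {"N2": 126.2, "Ar": 150.7, "O2": 154.6, "CO2": 304.1}
BOILING_T_K_1ATM = {"Ne": 27.1, "N2": 77.4, "Ar": 87.3, "O2": 90.2,
                    "Kr": 119.9, "Xe": 165.1}


def liquefiable_by_compression(gas: str, temp_k: float) -> bool:
    """True if pressure alone can liquefy the gas at temp_k,
    i.e. the gas is below its critical temperature."""
    return temp_k < CRITICAL_T_K[gas]


def distillation_order(boiling_points: dict) -> list:
    """Components from most to least volatile (lowest boiling point first)."""
    return sorted(boiling_points, key=boiling_points.get)


room_k = 293.15  # 20 C
for gas in CRITICAL_T_K:
    print(gas, "liquefiable by compression at 20 C:",
          liquefiable_by_compression(gas, room_k))
print("most to least volatile:", distillation_order(BOILING_T_K_1ATM))
```

nitrogen, argon and oxygen all print `False`, which is why air has to be cooled first; the boiling points of nitrogen, argon and oxygen sit within about 13 K of each other, which is why the column needs to be a proper fractionating one.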

if you don’t actually care about it being a liquid, there’s another method called pressure swing adsorption that separates gases based on how tightly they bind to porous surfaces under pressure. this is how medical oxygen concentrators work
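a toy model of the pressure swing idea, using the extended Langmuir isotherm for a gas mixture. the `K` values and `Q_MAX` below are hypothetical, chosen only so that nitrogen binds more tightly than oxygen (which is what the zeolite in oxygen concentrators is picked for); argon is ignored and air is treated as 79% N2 / 21% O2.

```python
# Toy pressure swing adsorption model; all adsorbent parameters are made up.
AIR = {"N2": 0.79, "O2": 0.21}   # mole fractions, argon ignored
K = {"N2": 0.5, "O2": 0.1}       # hypothetical affinity constants, 1/bar
Q_MAX = 2.0                      # hypothetical saturation loading, mol/kg


def extended_langmuir(partial_p: dict, k: dict, q_max: float = Q_MAX) -> dict:
    """Loadings for a mixture: q_i = q_max * K_i * p_i / (1 + sum_j K_j * p_j)."""
    denom = 1.0 + sum(k[g] * p for g, p in partial_p.items())
    return {g: q_max * k[g] * p / denom for g, p in partial_p.items()}


def loadings_at(total_bar: float) -> dict:
    """Adsorbed amounts when the bed is in equilibrium with air at total_bar."""
    partial = {g: y * total_bar for g, y in AIR.items()}
    return extended_langmuir(partial, K)


high = loadings_at(3.0)  # feed step: bed pressurized with air
low = loadings_at(1.0)   # regeneration step: bed vented to ~atmospheric
released = {g: high[g] - low[g] for g in AIR}

# How much the bed prefers N2 over O2, relative to their ratio in the feed.
selectivity = (high["N2"] / high["O2"]) / (AIR["N2"] / AIR["O2"])
print("loaded at 3 bar:", high)
print("released on venting:", released)
print("N2/O2 selectivity:", round(selectivity, 3))
```

in the feed step the bed soaks up mostly nitrogen, so the gas passing through comes out oxygen-enriched; dropping the pressure releases the nitrogen and regenerates the bed. concentrators typically run two beds alternating between these steps so there’s always one producing oxygen.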

making liquid nitrogen is pretty efficient these days, as in not much more energy is used than the thermodynamic minimum
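for reference, that thermodynamic minimum has a standard form. taking a unit mass of gas from ambient state 1 (at temperature $T_0$) to saturated liquid $f$, with heat exchanged only with the surroundings at $T_0$, a reversible process needs

$$
W_{\min} = T_0\,(s_1 - s_f) - (h_1 - h_f)
$$

where $h$ and $s$ are specific enthalpy and entropy taken from property tables. “efficient” here means a real plant’s specific work isn’t far above this number.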

yeah nah, you are a tool and talk like one, there’s zero risk you’re ever getting close to admission

how many alts have you made today

shooting down your boss’s stupid ideas is the #1 productivity tip for professionals (like most people on lemmy are)

if everything else fails, add a matrix account to your profile (to have another communication option) and reach out to the admin of your instance

We’re British

important point lol, the 90s sucked in the eastern bloc https://en.wikipedia.org/wiki/Russian_cross_(demography)

also a couple of east asian countries, like at minimum south korea and taiwan, were proper tinpot dictatorships until the 90s

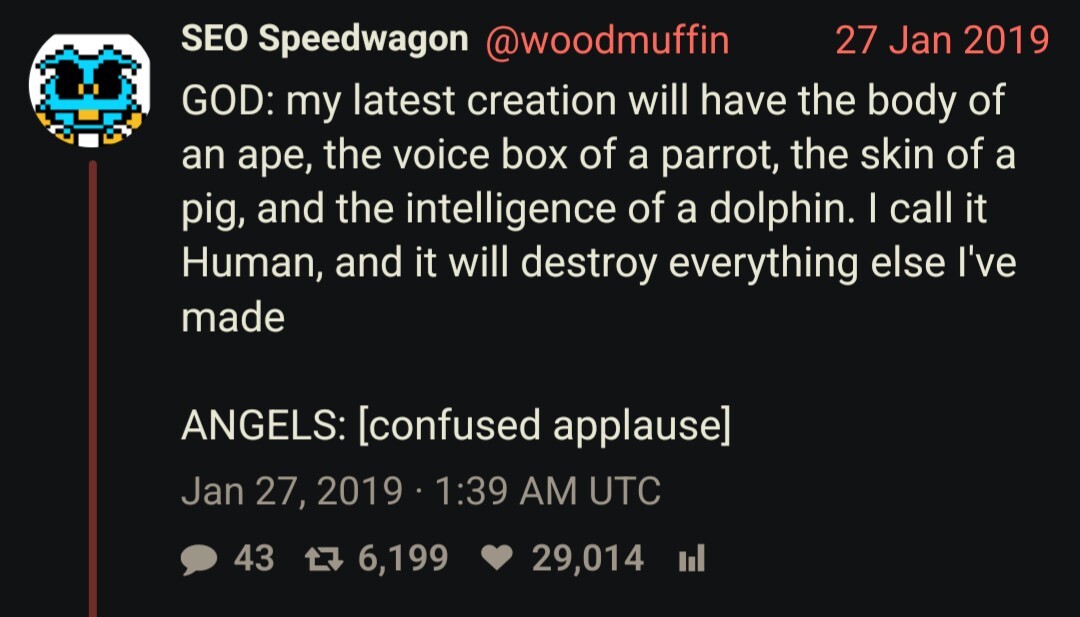

this meme is about piss

as i understand, this is what bellingcat uses as a major source of data when reporting on russian activities

“It is one of the paradoxes of modern Russia: on the one hand, these services are illegal and rely on leaked data, yet on the other, they are far more convenient for day-to-day police work than the multitude of official departmental databases,”

gaben on piracy: “We think there is a fundamental misconception about piracy. Piracy is almost always a service problem and not a pricing problem,”

There is thermal energy storage included as a major part. This works because compressing CO2 to 55 atm adiabatically heats it up to some 450-ish C, so that heat is pretty high grade, and only the final stage cools it down with a heat exchanger open to air. In the discharging direction, some heat is taken from outside air to evaporate part of the CO2, and the stored heat gets used up
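a quick sanity check on the 450-ish C figure, as a sketch: treat CO2 as an ideal gas with constant heat capacity ratio, compressed reversibly and adiabatically in one go from 1 atm at 300 K to 55 atm. the inlet temperature, the single-stage assumption and gamma ≈ 1.29 are my assumptions; CO2’s gamma drops as it heats up and real compressors aren’t reversible, so this only lands in the right ballpark.

```python
# Ideal-gas adiabatic compression estimate; inputs are assumptions, not plant data.

def adiabatic_outlet_temp_k(t_in_k: float, pressure_ratio: float, gamma: float) -> float:
    """Reversible adiabatic compression of an ideal gas:
    T2 = T1 * (p2 / p1) ** ((gamma - 1) / gamma)."""
    return t_in_k * pressure_ratio ** ((gamma - 1.0) / gamma)


T_IN_K = 300.0    # assumed inlet, roughly ambient
P_RATIO = 55.0    # 1 atm -> 55 atm, treated as a single stage
GAMMA_CO2 = 1.29  # approximate cp/cv of CO2 near room temperature

t_out_k = adiabatic_outlet_temp_k(T_IN_K, P_RATIO, GAMMA_CO2)
t_out_c = t_out_k - 273.15
print(f"outlet ~ {t_out_c:.0f} C")
```

this comes out around 465 C, consistent with heat that’s high grade enough to be worth storing.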

compressors, turbines (like steam turbines), piping (some of it heat-resistant to 500C), a container for liquid carbon dioxide, lots of plastic for the bubble, something for thermal storage, and dry, clean carbon dioxide. none of these are unusual or restricted resources, and they don’t depend on critical raw materials or anything like that

Compressed air storage without heat recovery is more like 30% round-trip efficiency, so this is huge

Carbon dioxide can be liquefied relatively easily, which i guess is what makes this efficient

i can only watch in disbelief

this multicolored pattern looks like this because the layer (whatever it is) has a thickness comparable to the wavelength of light; the mechanism is the same as with oil films on water being colorful. it didn’t spread from the magnet: the layer is thinnest near the magnet and gets thicker towards the edges, which suggests it might just be dirt/oil that accumulated on it through contact. try wiping it with alcohol or acetone or what have you
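a minimal sketch of why thickness matters, using the oil-on-water case: at normal incidence, with exactly one of the two reflections picking up a half-wave phase flip (air/oil/water, since oil has a higher refractive index than water), reflection is strongest when 2·n·d = (m + 1/2)·λ. the refractive index of 1.5 and the 380 to 750 nm visible range are assumptions, and a film on metal has different phase shifts, so the exact colors would shift, but the principle is the same.

```python
# Thin-film interference sketch: which visible wavelengths a film reinforces.
VISIBLE_NM = (380.0, 750.0)  # assumed visible range


def reinforced_wavelengths_nm(thickness_nm: float, n_film: float) -> list:
    """Visible wavelengths reflected constructively at normal incidence,
    assuming one half-wave phase flip: 2 * n * d = (m + 1/2) * lambda."""
    path = 2.0 * n_film * thickness_nm  # optical path difference
    hits = []
    m = 0
    while True:
        lam = path / (m + 0.5)
        if lam < VISIBLE_NM[0]:  # wavelengths only get shorter as m grows
            break
        if lam <= VISIBLE_NM[1]:
            hits.append(lam)
        m += 1
    return hits


print(reinforced_wavelengths_nm(300.0, 1.5))        # one strong color
print(len(reinforced_wavelengths_nm(3000.0, 1.5)))  # many orders overlap
```

a 300 nm film reinforces a single wavelength (600 nm, orange), so you see a distinct color; at 3000 nm a dozen wavelengths across the spectrum are reinforced at once and the colors wash out toward white. that’s why the bands are only visible where the layer is thin.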