I tried to leave a comment, but it doesn’t seem to be showing up there.

I’ll just leave it here:

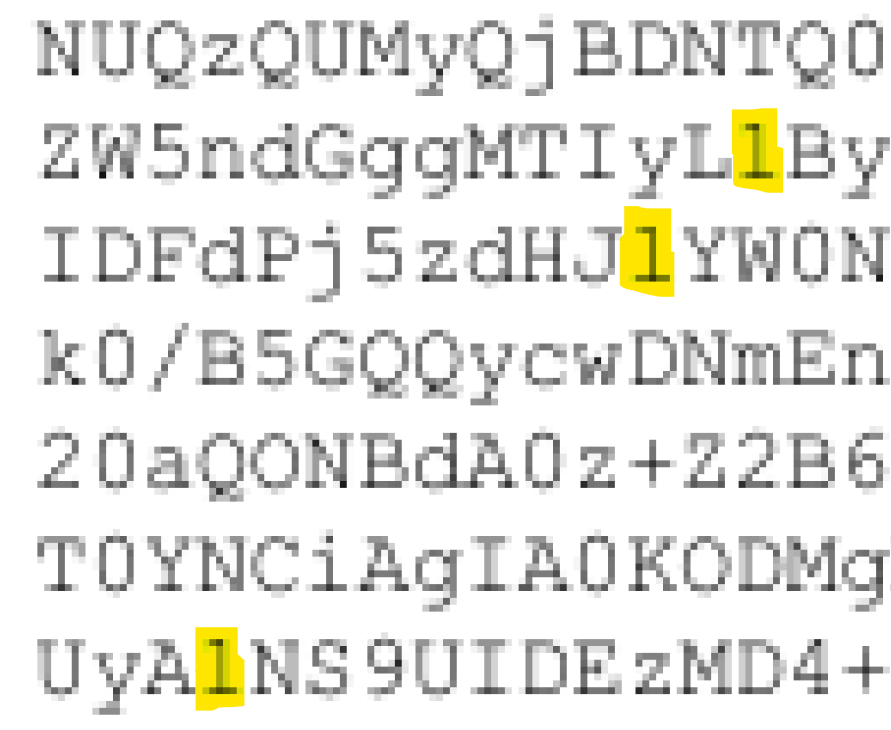

too tired to look into this, one suggestion though - since the hangup seems to be comparing an L and a 1, maybe you need to get into per-pixel measurements. This might be necessary if the effectiveness of ML or OCR models isn’t at least 99.5% for a document containing thousands of ambiguous L’s. Any inaccuracies from an ML or OCR model will leave you guessing 2^N candidates which becomes infeasible quickly. Maybe reverse engineering the font rendering by creating an exact replica of the source image? I trust some talented hacker will nail this in no time.

i also support the idea to check for pdf errors using a stream decoder.

How big is N though?

Asking the real questions

We just need those 76 page base64 printouts stuffed into captcha so we can crowdsource cracking them

…it’s safe to say that Pam Bondi’s DoJ did not put its best and brightest on this (admittedly gargantuan) undertaking

Actually they did. It’s just that their best and brightest are fairly dim.

Well it’s all the leftovers at this point. When the priority is loyalty, performance suffers.

This is really great, dont tell this to anyone!

They are still releasing more parts of the Epstein files!

Take the advice of Napoleon: Never interrupt the enemy while they are making a mistake!

yeah, I’m always a bit annoyed when people laught at the incompetence.

Let them.

Heck, some of it might even be intentional. Don’t take away tools for leakers

Has anyone checked if it’s just black text on a black background. That would be in line with the competence level of Donnie’s administration.

I took a brief look at one and it seems they may have learnt their lesson from the first time around, unfortunately.

Some of the reactions are some in an effective way, and I assume this example is one of them. The problem being evidently they didn’t think any what might be in big base64 blobs in the PDF, and I guess some of these folks somehow had their email encoded as PDF, which seems bonkers…

Some email programs did that, especially when there was special formatting involved. I seem to recall Thunderbird doing it in the past, as well as outlook.

Amazing what a bit of knowledge, intelligence and competency can achieve.

Inversely, it’s also amazing what a lack thereof cannot achieve, for instance, redacting publicized documents.

I am not intelligent enough to understand any of it but that was a fun read.

TIL the origin of Courier.

Long story short:

- Some of the emails in the file dump had attachments.

- The way attachments work in emails is that they’re converted to encoded text.

- That encoded text was included - badly - in the file dump.

- So it’s theoretically possible to convert them back to the original files, but it will take work to get the text back. Every character has to be exactly correct.

Source: I’m a software developer and I’m currently trying to recover one of these attachments.

Curious here, this is base 64? And what’s behind it is more often than not an image or text? And you need to do ocr to get the characters?

Maybe for the text it could use a dictionary to rubber stamp whether that zero is actually a letter oh, etc etc?

I’m curious to know what the challenge is and what your approach is.

Yes, it’s base64. And what’s behind it could be anything that can be attached to an email.

In this case, it’s a PDF. If the base64 text can be extracted accurately, then the PDF that was attached to the email can be recreated.

The challenge is basically twofold:

- There’s a lot of text, and it needs to be extracted perfectly. Even one character being wrong corrupts it and makes it impossible to decode.

- As the article points out, there are lots of visual problems with the encoded text, including the shitty font it’s displayed with, which makes automating the extraction damn near impossible. OCR is very good these days, but this is kind of a perfect example of text that it has trouble with.

As for my approach, I’m basically just slowly and painstakingly running several OCR tools on small bits at a time, merging the resulting outputs, and doing my best to correct mistakes manually.

Ah yes pdf is a clusterfuck where anything is valid I think, so minimal redundancy.

Text and image formats are way more lenient and are full of redundancies.

I’m a software developer and I’m currently trying to recover one of these attachments.

🫡

Godspeed friend

Are you having as much trouble with OCR as the article author? I would have thought OCR was a solved problem in 2026 even with poor font selection.

I’m not having trouble with it as such, it’s just a slow and painstaking process. The source is crappy enough that an enormous number of characters need to be checked manually, and it’s ridiculously time-consuming.

I wonder if they gave considered crowdsourcing this, having many people type in small chunks of the data by hand, doing their own character recognition? Get enough people in and enough overlap and the process would have some built-in error correction.

I mean the problem is that even with human eyes it’s still really hard to tell l and 1 in that font.

Not an expert at all but I’m genuinely curious how long it would take to check all possibilities for each I or 1? Is that the full length of the hash or whatever? So in this example image we have 2^8 =256 different possibilities to check? Seems like that would be easy enough for a computer.

Edit: actually read the article. It’s much more complicated than this. This isn’t really the only issue and the base64 in the example was 76 pages long.

There’s an iOS game about the history of fonts you might enjoy. Struggling to find it at the moment, but you play a colon navigating through time, solving various puzzles.

Fun fact: this guy uses fish shell.

Source? I’ve seen the bash reference manual in the files

The article author. And they state it explicitly in the footnotes.

Interesting in few weeks we might end up with some additional unredacted documents

I need an ELI5 version of this. (Note: this comment is a critique of me, not the author or the content of the article.)

Edit: if “nerdsnipe” isn’t in the dictionary, it totally should be.

Some of the Epstein emails were released as scanned PDFs of raw email format (See MIME)

MIME formatted emails are ASCII based. To include an attachments, which can be binary, the MIME format specifies it must be encoded using base64. Base64 can always take binary input and return an ASCII output. This is trivial to reverse if you have the ASCII output.

However, the font choice is inconvenient because l and 1 look the same.

I’m a bit confused by the article is only discussing extracting PDFs while in actuality you can reverse any attachment including images.

I am also no expert, so a smarter person will now correct me on anything I got wrong.

Its correct

Why the pdfs contain “wrong letters” though, i havent a clue

I think this is the origin: https://xkcd.com/356/