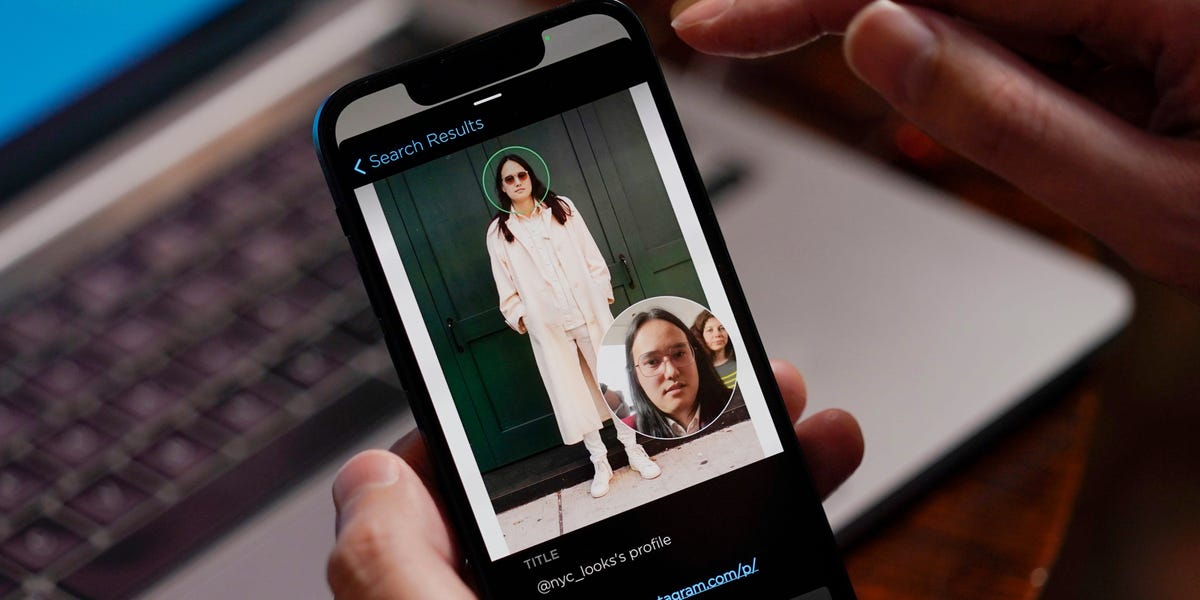

Clearview AI built a massive facial recognition database by scraping 30 billion photos from Facebook and other social media platforms without users’ permission, which law enforcement has accessed nearly a million times since 2017[1].

The company markets its technology to law enforcement as a tool “to bring justice to victims,” with clients including the FBI and Department of Homeland Security. However, privacy advocates argue it creates a “perpetual police line-up” that includes innocent people who could face wrongful arrests from misidentification[1].

Major social media companies like Facebook sent cease-and-desist letters to Clearview AI in 2020 for violating user privacy. Meta claims it has since invested in technology to combat unauthorized scraping[1].

While Clearview AI recently won an appeal against a £7.5m fine from the UK’s privacy watchdog, this was solely because the company only provides services to law enforcement outside the UK/EU. The ruling did not grant broad permission for data scraping activities[2].

The risks extend beyond law enforcement use: once photos are scraped, individuals permanently lose control over their biometric data. Critics warn this could enable:

- Retroactive prosecution if laws change

- Creation of unauthorized AI training datasets

- Identity theft and digital abuse

- Commercial facial recognition systems without consent[1]

The worst part is you can’t opt out as a full real human. You can only opt out one image at a time. So you’ll never know what they have to complete the job.

You also can’t opt out of other people sharing your shit.

I assure you, many people can and do opt out of being full real humans, and it is often because of the monsters who work at Clearview and their customers, who make this planet and reality itself a really unacceptable place to be trapped in.

Dazzle camouflage makeup, let’s all be juggalos

I’m 4 Faygos ahead of you