Is it just me, or do the clocks frequently break or change appearance without the page being refreshed?

Edit: never mind, I skipped past the sentence explaining that every minute, the site prompts LLMs for a new solution. This is hilariously sad how LLMs aren’t able to be consistent from one prompt to another.

It’s the expected result if your big ol’ artificial intelligence wannabe is ultimately just a stochastic word combinator.

if every single token is, at the end, chosen by random dice roll (and they are) then this is exactly what you’d expect.

that’s a massive oversimplification

not really. If the system outputs a probability distribution, then by definition, you’re picking somewhat randomly. So not really a simplification

Apologies for the late reply, but it turns out I can’t let that sit. Sorry for the rant, but I work in RL and saying “it’s just dice rolls” is insulting to my entire line of work. :(

A probability distribution is not the same as a random dice roll. Dice rolls are uniformly and independently random, whereas the probability distributions for LLMs are conditional on the context and the model’s learned parameters. Additionally, all modern LLMs use top-k and top-p sampling, which filter the probability distribution down to only high-confidence words, so the probability of it choosing to say random garbage is exactly zero.
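To make the sampling step concrete, here is a minimal sketch of a weighted draw with top-k and top-p filtering. It is a toy, not how any particular model implements it: the token names, the logits, and the helper names (`softmax`, `filter_top_k_top_p`, `sample`) are all made up for illustration.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def filter_top_k_top_p(probs, k, p):
    """Keep the k most likely tokens, then the shortest prefix whose mass reaches p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    kept, mass = [], 0.0
    for tok, pr in ranked:
        kept.append((tok, pr))
        mass += pr
        if mass >= p:
            break
    total = sum(pr for _, pr in kept)
    # Renormalize so the surviving tokens sum to 1 again.
    return {tok: pr / total for tok, pr in kept}

def sample(probs, rng):
    """Draw one token at random, weighted by its probability (not uniformly)."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical scores for the next token; the values are invented.
logits = {"two": 3.0, "three": 2.5, "noon": 0.5, "banana": -4.0}
filtered = filter_top_k_top_p(softmax(logits), k=3, p=0.9)

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample(filtered, rng) for _ in range(1000)]
```

In this toy setup only "two" and "three" survive the filter, so the draw is still random but the low-probability tokens can never be picked.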

The issues with LLMs have nothing to do with their sampling from random distributions. That’s just a minor part of their training, and some LLMs don’t even do random sampling since they use tree search. The issues with LLMs are the result of people trying to teach it intelligence using behavior cloning on a corpus of human words and images. Words can’t encode wisdom, only knowledge. Wisdom can only be gained through lived experience.

How well do you think you would perform if you were born into a cave, forced to read a thousand dictionaries in order with no context, and then your only interaction with the outside world was a single question from a single human, and then you died? If you ask me, the LLMs are doing surprisingly well given their “lived experiences”.

“conditional on the context and the model’s learned parameters.” you seem to be under the wrong impression that “random dice roll” == “random dice roll from a uniform distribution”. I didn’t say that. If it outputs a probability distribution, which it does, then you sample it randomly according to that distribution, not a uniform one.

As for your last paragraph: I wasn’t, I didn’t do that, and if that’s all the system can do then people should stop claiming it is even remotely intelligent. Whatever the excuses, the systems aren’t (and won’t be getting) there. If you’re trying to get me to empathize with a couple of matrices, then you’re not going to succeed.

I don’t care if you get offended because someone else doesn’t like your line of work. I think what you do is actively harmful to humanity. I also dislike weapons manufacturers, how they feel about it is irrelevant. You’re no different

you seem to be under the wrong impression that “random dice roll” == “random dice roll from a uniform distribution”.

Almost all dice are uniformly random. Unless you’re using weighted dice? Which, seeing how defensive you get when wrong, might actually make sense 🤔

Knowledge isn’t a competition. Nobody cares about any of this. We will all die and be forgotten. You’re on an internet forum with a bunch of people who have no idea who you are and who couldn’t care less about your knowledge of statistics.

Respectfully blocked. I have better things to do with my time.

This is hilariously sad how LLMs aren’t able to be consistent from one prompt to another.

Typically that’s configurable. Like for a chatbot, you’d want it to give the same/similar results for a given question, where with a character creator, you might want the results to vary so you can re-run until you get something you like.

Of course that wouldn’t be as funny here.
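As a rough illustration of what "configurable" can mean here, the sketch below assumes the knob is a temperature setting, which the comment above does not actually name. The logits and the function name `sample_with_temperature` are hypothetical.

```python
import math
import random

def sample_with_temperature(logits, temperature, rng):
    """Pick a token; temperature 0 is treated as greedy (always the top token)."""
    if temperature == 0:
        return max(logits, key=logits.get)
    # Dividing by the temperature flattens (T > 1) or sharpens (T < 1) the distribution.
    scaled = {tok: v / temperature for tok, v in logits.items()}
    m = max(scaled.values())
    weights = {tok: math.exp(v - m) for tok, v in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]

# Hypothetical scores for the next token; the values are invented.
logits = {"round": 2.0, "square": 1.5, "melting": 0.2}

# Temperature 0: the same answer no matter which seed is used.
greedy = {sample_with_temperature(logits, 0, random.Random(i)) for i in range(20)}

# Higher temperature: different seeds can give different answers.
varied = {sample_with_temperature(logits, 1.5, random.Random(i)) for i in range(200)}

# Same seed, same temperature: the draw is repeatable.
first = sample_with_temperature(logits, 1.5, random.Random(42))
again = sample_with_temperature(logits, 1.5, random.Random(42))
```

So a chatbot-style setup would sit near the greedy end, and a "re-run until you like it" tool would sit at a higher temperature.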

You can click on the button in the top right corner (with a question mark) to have explanations. The clocks are refreshed every minute

When I tried it Kimi K2 was surprisingly consistent and not even as bad as the others. Occasionally the numbers or hands (I couldn’t really tell which) were positioned a bit off, for example the seconds hand will appear to be horizontal but the 9 or 3 will be slightly below or slightly above the hand. But whoever can center a div may throw the first stone, and it’s not going to be me for sure.
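For reference, the hands and the numerals line up only if both use the same angle convention. A small sketch of the usual arithmetic, measured in degrees clockwise from 12 (the function names are made up, and the sample time is arbitrary):

```python
def hand_angles(hour, minute, second):
    """Return (hour, minute, second) hand angles in degrees, clockwise from 12."""
    second_angle = 6.0 * second                    # 360 degrees / 60 seconds
    minute_angle = 6.0 * minute + 0.1 * second     # minute hand creeps with the seconds
    hour_angle = 30.0 * (hour % 12) + 0.5 * minute + second / 120.0
    return hour_angle, minute_angle, second_angle

def numeral_angle(numeral):
    """Angle of a numeral on a round dial: 360 degrees / 12 numerals."""
    return 30.0 * (numeral % 12)

hour_angle, minute_angle, second_angle = hand_angles(10, 8, 15)

# At 15 seconds the second hand is horizontal (90 degrees),
# which is exactly where the 3 should sit.
aligned = second_angle == numeral_angle(3)
```

If a generated clock places the 3 at anything other than 90 degrees, or offsets the hand's rotation origin, you get the "slightly above or below" effect described above.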

This is my favorite

gaslight clock

So the prompt is intentionally breaking things…?

Edit: I guess this was somehow the chatty output doing who knows wtf

The last one, Kimi K2, has been consistently good as long as I’ve been looking at it. That’s pretty impressive.

The rest are hilarious!

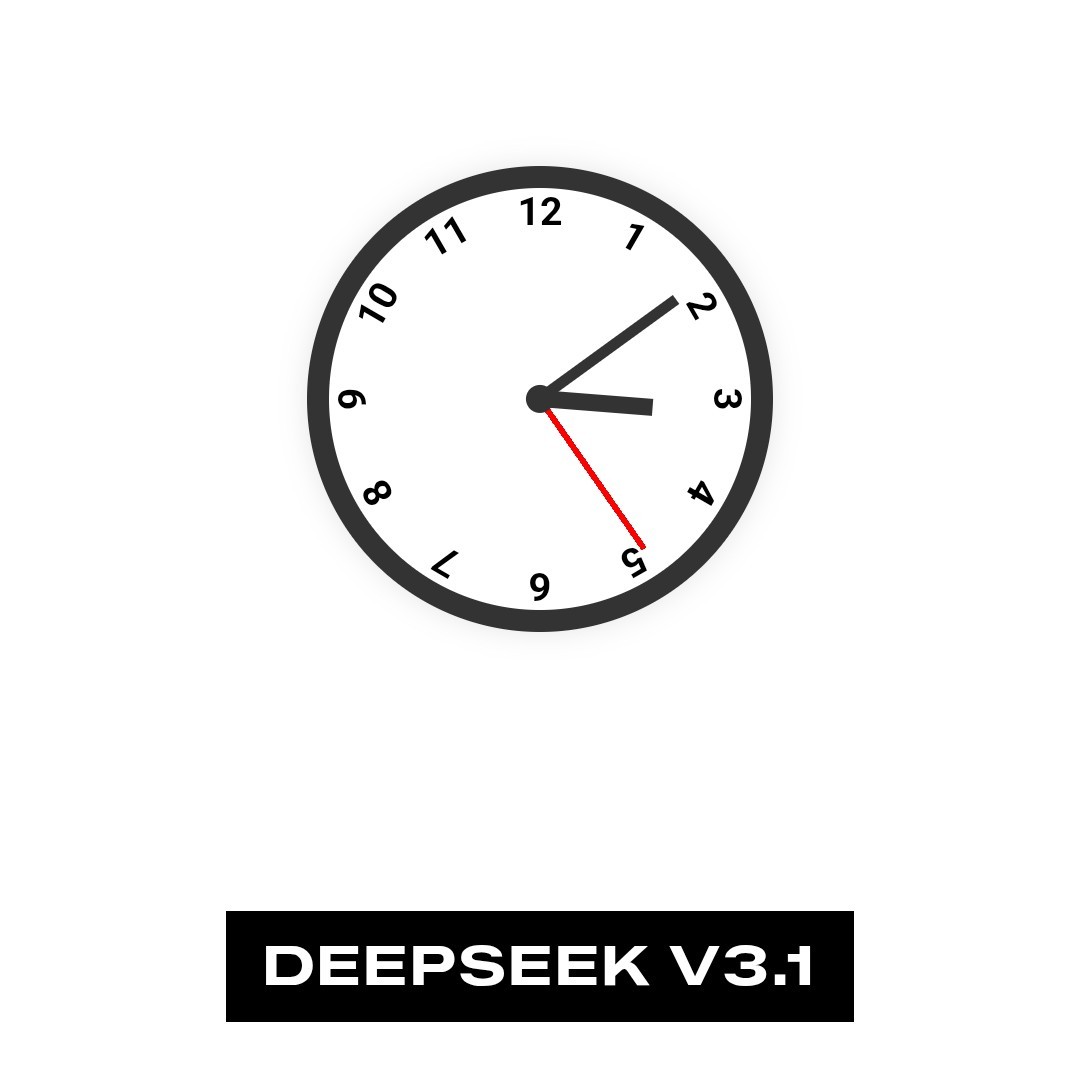

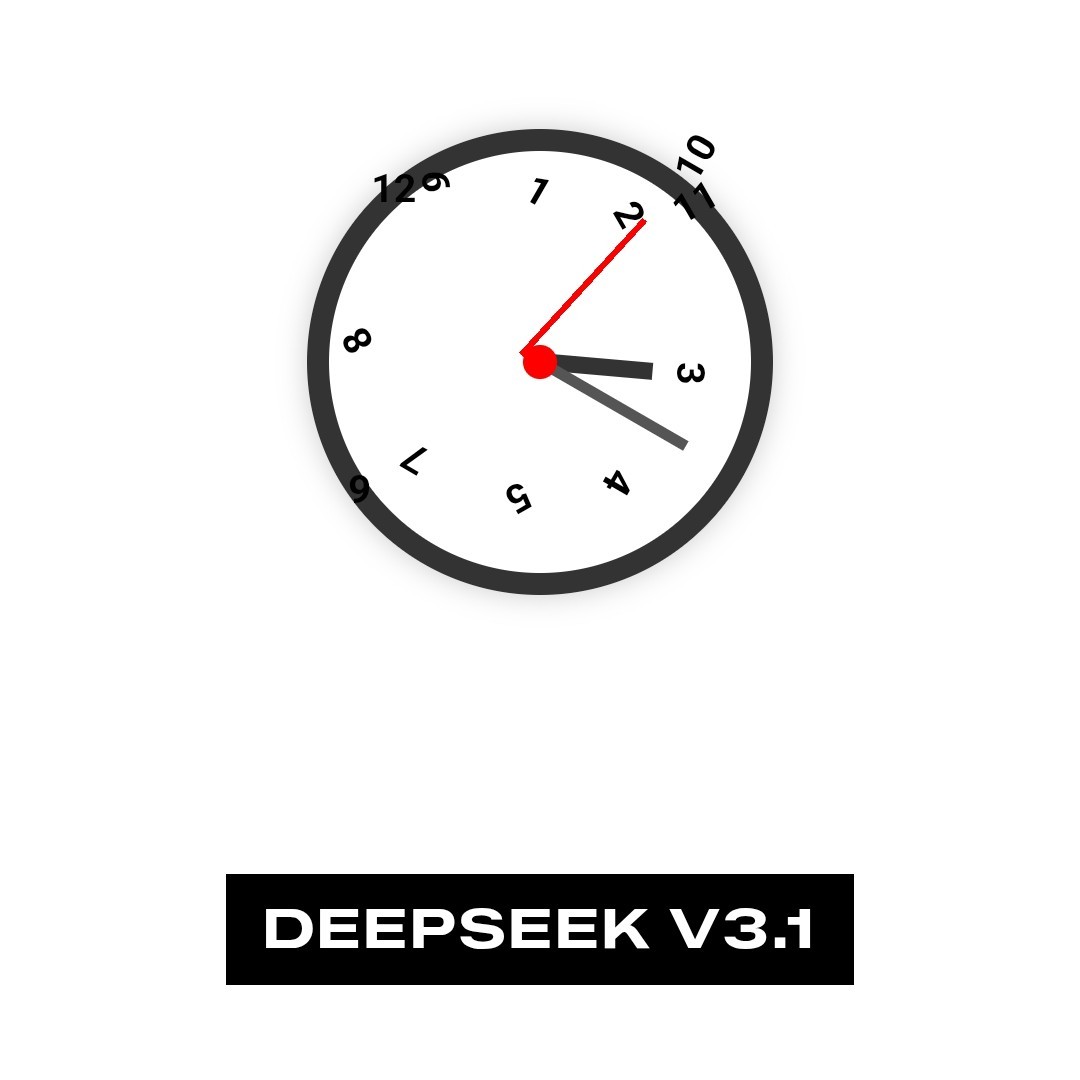

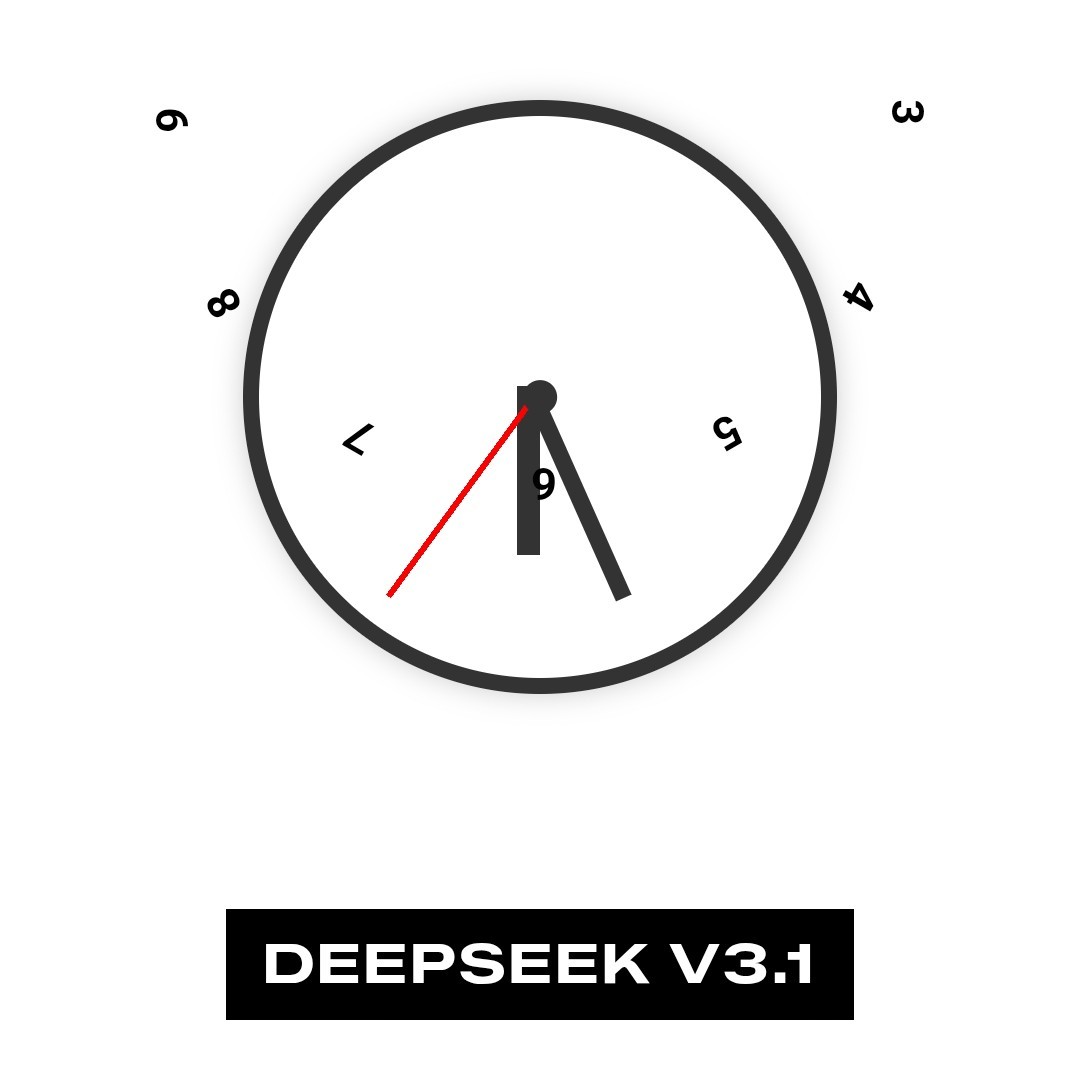

Deepseek has a recognisable clock now and then, too. They both mix up the current time, though.

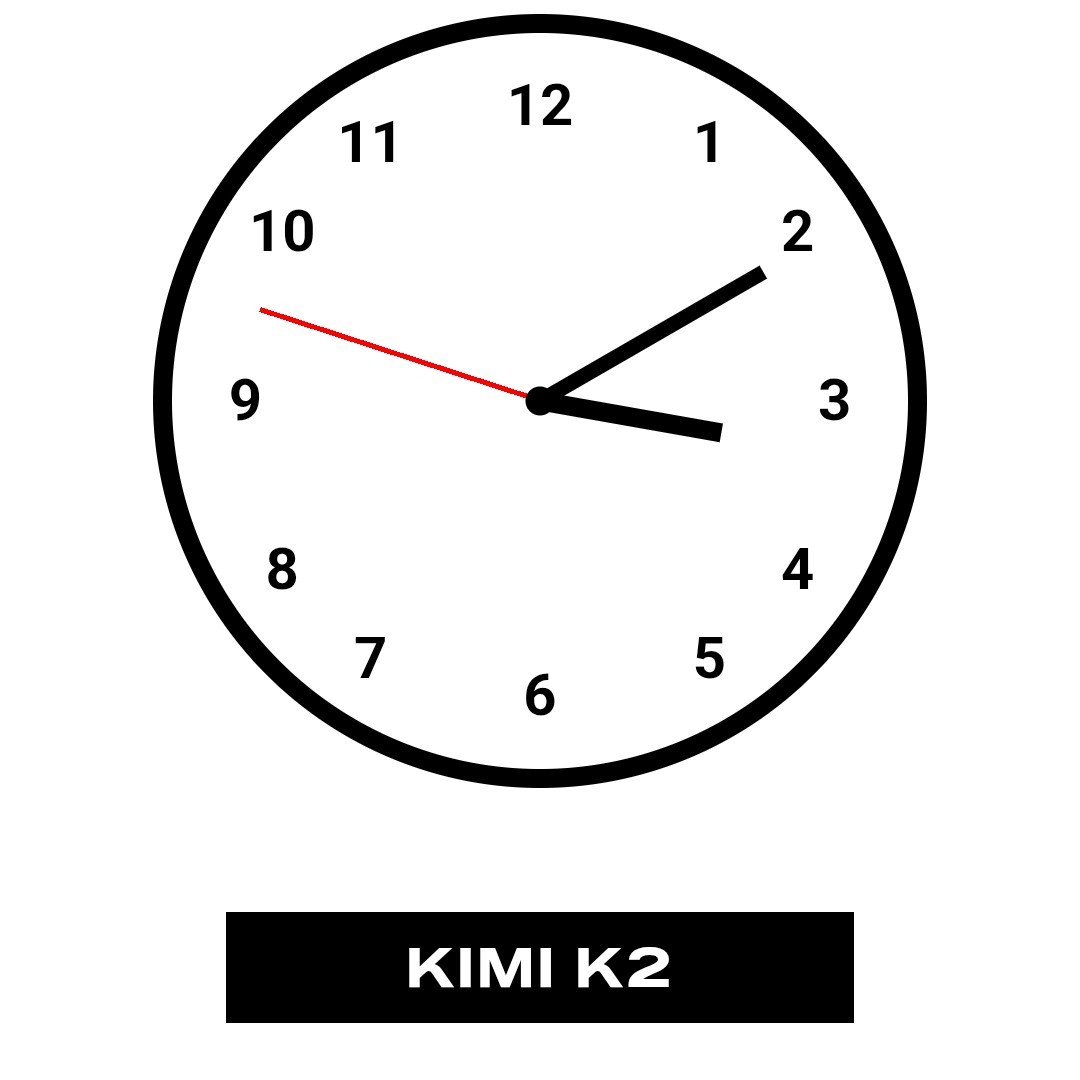

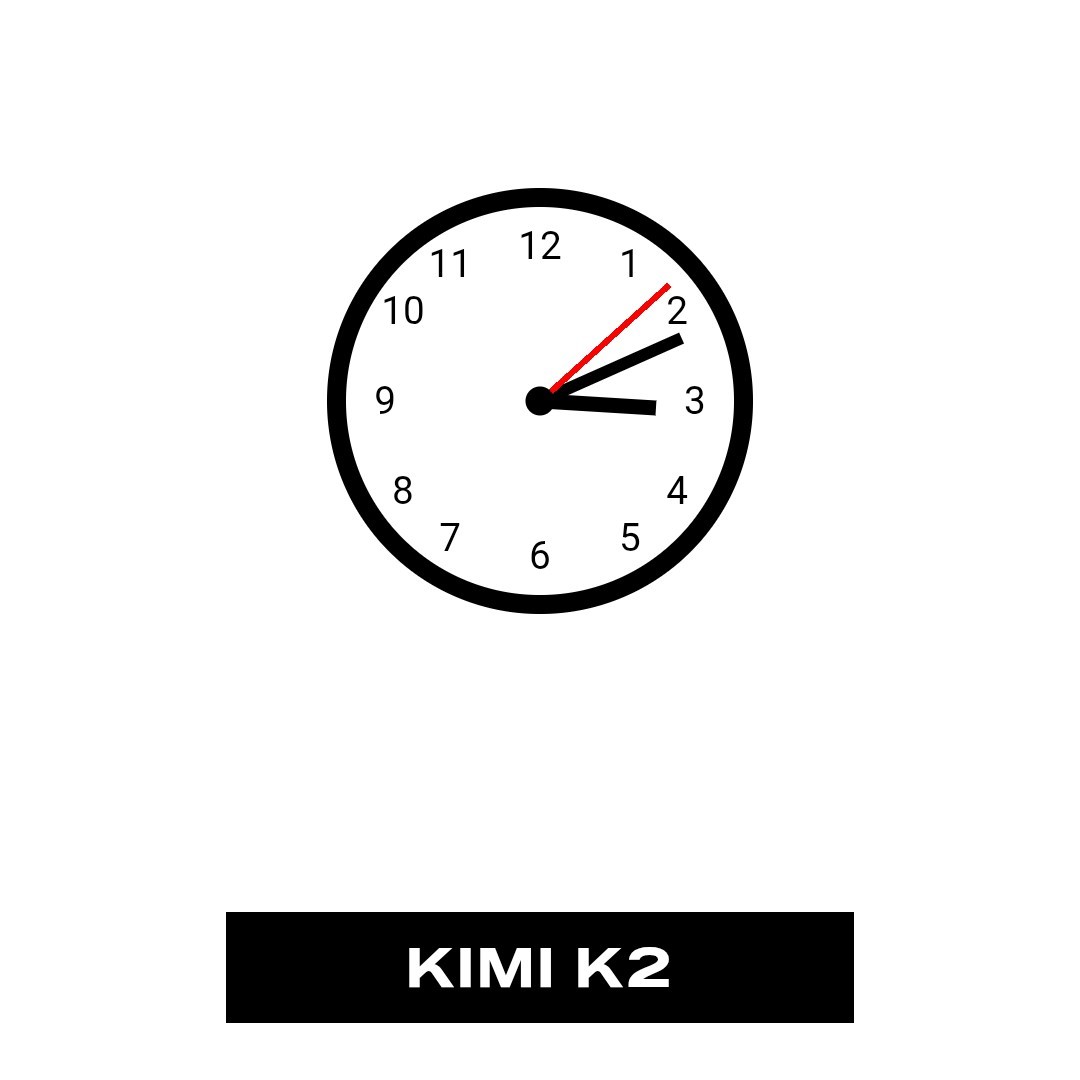

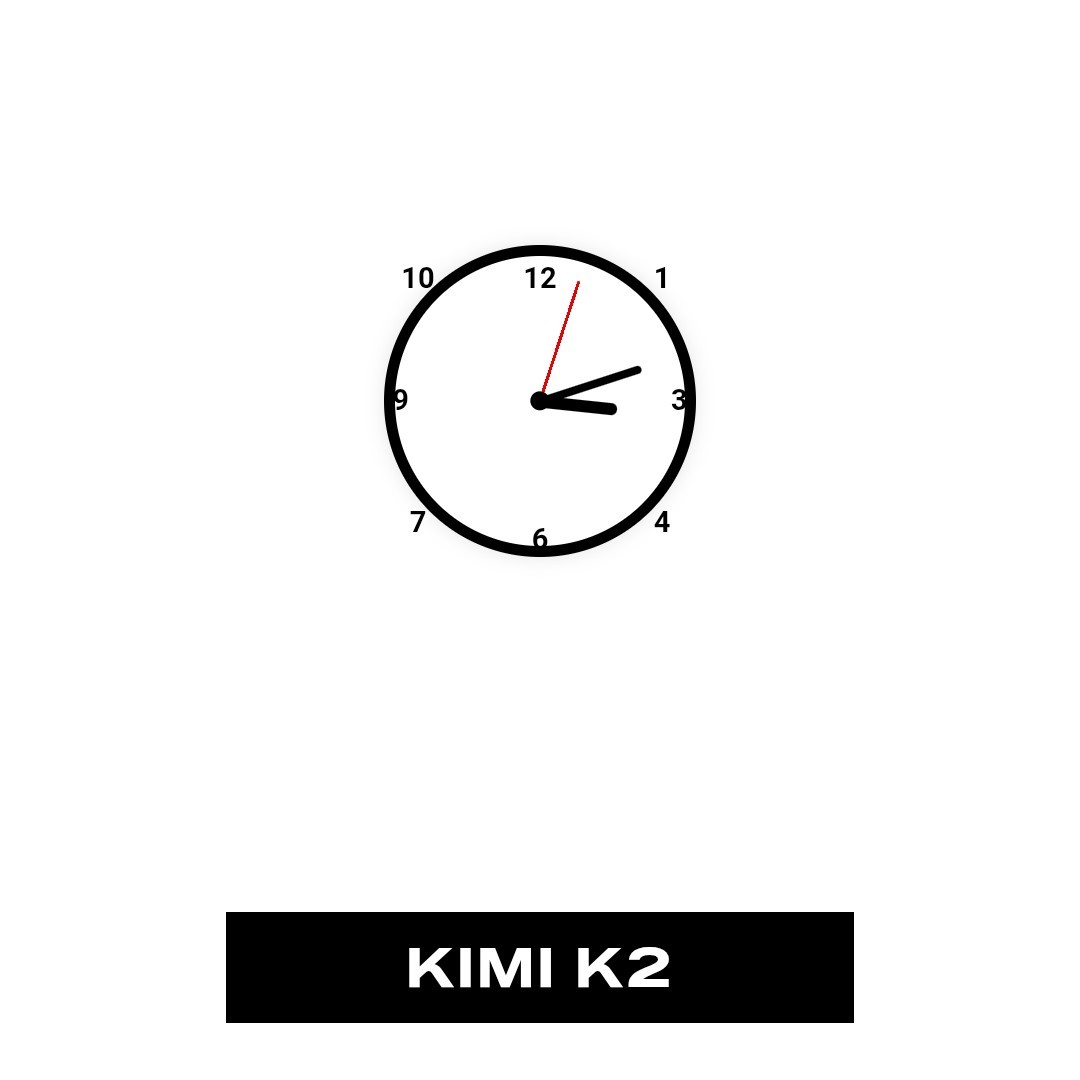

By far the best, but still off. These three were loaded in the same order as I post them:

I dig the square clock, and am now sad that the numbers can’t be put into the corners on a real clock. Unless they’re shifted from the usual position.

Cartier found a workaround quite some time ago and maybe they weren’t even the first to design a square ‘clock’:

(The roman numerals are nice, but notice the ‘circle’ between the numerals and the hands, almost like the circle from the ai)

That one is pretty good, though the Roman numerals are rather busy and uneven.

This one is closer, though now I have to wonder if all non-square rectangular clocks have an old-timey whiff for me, or it’s just the border here:

This is also impressive:

I like the second one a lot, especially how the upper and bottom numerals face the floor and the left and right ones face towards the center, and to allow for that there has to be a sudden flip from 3>4 and 8>9. But the indices are not playing by the square-clock rule and, unlike the Cartier one, form a regular oval shape.

I like how the upper one had to find a way to make clear which index represents the numerals - it really shows the problem in projecting the circular movement of the hands into a rectangular (thanks, that’s the right word) shape.
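The projection problem can be sketched numerically. Assuming the simplest rule (push each numeral outward along its hand direction until it hits the edge of a square), which is just one possible layout and not necessarily what any of these watches do, the function name below is made up:

```python
import math

def numeral_position_on_square(hour, half_side=1.0):
    """Project an hour's direction from the center onto the edge of a square.

    Coordinates have the center at (0, 0), x to the right and y upward.
    """
    theta = math.radians(30 * (hour % 12))     # angle clockwise from 12
    dx, dy = math.sin(theta), math.cos(theta)  # unit direction of the hand
    # Stretch the direction until its larger component touches the edge.
    scale = half_side / max(abs(dx), abs(dy))
    return dx * scale, dy * scale

positions = {h: numeral_position_on_square(h) for h in range(1, 13)}

# Distance of each numeral from the center: no longer constant on a square.
distances = {h: math.hypot(x, y) for h, (x, y) in positions.items()}
```

With this rule the 1 lands on the top edge and the 2 on the right edge, so the corner (at 45 degrees) falls between them. That matches the point above that no numeral can sit in a corner unless it is shifted from its usual position.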

I think most analog clocks/watches will give you an old-timey whiff much more often than not, just because there is a more new-timey alternative. I went looking for some watch faces for smart watches, but couldn’t really find any interesting one. Most are either digital numbers or a round clock on a rectangular display.

Neither of those interests me like the Cartier Tank, though to be honest I find those really ugly watches. It’s just this double outlined rectangle(-ish shape) which is unevenly split into 60 boxes that I like (seen below on the first, third and fifth watch).

Haha, I found myself thinking the same thing, and then caught myself, realizing all the other LLMs on this page had lowered the bar immensely for what I’m considering impressive.

I thought the same and then Kimi K2 came up with a clock that has two 12 and no 11…

Removed by mod

that’s scary how dementia works :'(

This kinda freaked me out: AI models fed their own outputs as training data will quickly start making distorted images that look spookily like human paintings made under the progression of mental illness or drugs.

well that was terrifying

Thanks for sharing this! I really think that when people see LLM failures and say that such failures demonstrate how fundamentally different LLMs are from human cognition, they tend to overlook how humans actually do exhibit remarkably similar failure modes. Obviously dementia isn’t really analogous to generating text while lacking the ability to “see” a rendering based on that text. But it’s still pretty interesting that whatever feedback loops did get corrupted in these patients led to such a variety of failure modes.

As an example of what I’m talking about, I appreciated and generally agreed with this recent Octomind post, but I disagree with the list of problems that “wouldn’t trip up a human dev”; these are all things I’ve seen real humans do, or could imagine a human doing.

such a variety of failure modes

What I find interesting is that in both cases there is a certain consistency in the mistakes too - basically every dementia patient still understands the clock is something with a circle and numbers and not a square with letters, for example. LLMs can tell you complete bullshit, but still understand it has to be done with perfect grammar in a consistent language. So much so that they struggle to respond outside of this box - ask one to insert spelling errors to look human, for example.

the ability to “see”

This might be the true problem in both cases: neither the patient nor the model can comprehend the bigger picture (a circle is divided into 12 segments, because that is how we deconstructed the time it takes for the earth to spin around its axis). Things that seem logical to us are logical because of these kinds of connections with other things we know and comprehend.

… what

Basically this: https://www.psychdb.com/cognitive-testing/clock-drawing-test

Thanks.

What I still didn’t figure out about the comment I replied to is:

- What is each row? They’re labeled I, II, III, IV. What’s being counted?

- Why did they link to a home interior design website under “via”?

Good questions. I don’t know, and I can no longer try to find out, as the mods have now removed the comment. (Sorry for the double-post. I got briefly confused about which comment you were referring to and deleted my first post, then realized I’d been frazzled and the post in question really was deleted by the mods.)

deleted by creator

I guess that test is going to become less useful as generation Z ages

Get educated

Thanks for sharing that mindfuck. I honestly would’ve thought something was wrong with my cognition if you hadn’t mentioned it was a test beforehand.

It’s funny how GPT-5 is consistently the worst one, and it’s not even close.

qwen 2.5 is absolutely pants on head ridiculous compared to gpt5 when I’m looking at it right now.

Some of these are absolutely hilarious

Given that the AI models are basically constructing these “blindly” (using the language model to string together HTML and JavaScript without really being able to check how it looks), some of these are actually pretty impressive. But also making the AI do things it’s bad at is funny. Reminds me of all the AI ASCII art fails…

qwen is trying her best 😭😔

So far, I’d give qwen the prize for most artistic impression of a clock.

Kimi K2 appears to consistently get it right.

And just as I typed that, Kimi made one where 9 and 10, and 11 and 12 overlapped.

What is this obsession with clocks recently?

I don’t know if it’s actually related, but I’ve read that asking people to draw a clock face is a simple way to identify some brain problems

Quick screening for dementia, according to this

Edit: I guess this means most of the AIs have ‘Conceptual Deficits’, pretty accurate lol

Would be funny if AI models are generating such wildly useless “clocks” because they ingested too many dementia screening tests in their training data

There is someone training the biggest, bestest model to draw clock faces to pass that test as we speak.

I’m guessing it’s an easy metric to compare benchmarks. “Write a clock”.

I don’t even

I was surprised that both Grok and Gemini 2.5 got it right once, only to fuck it up on the refresh

Not really world clocks, they just try to use JavaScript to display the device’s time.

No JavaScript I think, it’s just HTML and CSS. The initial time is provided in the prompt every minute according to the description. I wonder if they’d be any better if they could use JS. Probably not.

“Time is a relative of mine” / Eisenstein

Deepseek seems to have the only functional clock.

Depends on when you load it. They refresh every minute. In the first one I got, Deepseek’s was almost functional, but Haiku had one that was surprisingly good.

I’ve never seen any of the OpenAI models come up with anything that was more than completely broken.

I thought the same, until it refreshed and it got worse and it refreshed and it was different but still bad. These three were loaded in the order as posted:

You know, I don’t, and what the fuck?

Well, KIMI K2 seems to have created the working one. Others failed. I suppose that this model was optimized for this while the others were not.

The clocks change every minute. I’ve seen some from deepseek and qwen that looked ok. But kimi seems to be the most consistent

Really cool idea, but the site seems a bit biased toward the Chinese models, or is otherwise set up weird. I’m not able to reproduce how consistently bad the others are in web dev arena, which is generally accepted as the gold standard for testing AI web dev ability.

Each model is allowed 2000 tokens to generate its clock. Here is its prompt: Create HTML/CSS of an analog clock showing ${time}. Include numbers (or numerals) if you wish, and have a CSS animated second hand. Make it responsive and use a white background. Return ONLY the HTML/CSS code with no markdown formatting.

are you using the same prompt?
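For anyone who wants to try reproducing it, filling in the prompt quoted above could look roughly like this. The time format, the sample timestamp, and the name `build_prompt` are assumptions; the thread does not say how the site formats `${time}`.

```python
from datetime import datetime
from string import Template

# Prompt text as quoted in the thread; ${time} is the only placeholder.
PROMPT = Template(
    "Create HTML/CSS of an analog clock showing ${time}. "
    "Include numbers (or numerals) if you wish, and have a CSS animated second hand. "
    "Make it responsive and use a white background. "
    "Return ONLY the HTML/CSS code with no markdown formatting."
)

def build_prompt(now):
    """Insert the current time into the prompt.

    The HH:MM:SS format is a guess, not something the site documents in the thread.
    """
    return PROMPT.substitute(time=now.strftime("%H:%M:%S"))

# Arbitrary example timestamp, used only to show the substitution.
prompt = build_prompt(datetime(2025, 1, 1, 10, 8, 42))
```

When comparing results, it is worth sending exactly this text with the same output-token limit, since a truncated response could break the clock on its own.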

There are a couple of differences. It’s giving it the current time as part of the prompt, which is interesting. The other difference is that it’s asking it to make it responsive. But even when I use that exact prompt (inserting the time obv), it works fine on claude, openai, and gemini.

So there’s definitely an issue specific to this page somewhere. Maybe it’s not iframing them? I’m on mobile so I can’t check.