“No Duh,” say senior developers everywhere.

The article explains that vibe code often is close, but not quite, functional, requiring developers to go in and find where the problems are - resulting in a net slowdown of development rather than productivity gains.

Even though this shit was apparent from day fucking 1, at least the Tech Billionaires were able to cause mass layoffs, destroy an entire generation of new programmers’ careers, introduce an endless amount of tech debt and security vulnerabilities, all while grifting investors/businesses and making billions off of all of it.

Sad excuses for sacks of shit, all of them.

Look on the bright side, in a couple of years they will come crawling back to us, desperate for new things to be built so their profit machines keep profiting.

Current ML techniques literally cannot replace developers for anything but the most rudimentary of tasks.

I wish we had true apprenticeships out there for development and other tech roles.

I mean… At best it’s a stack overflow/google replacement.

There’s some real perks to using AI to code - it helps a ton with templatable or repetitive code, and setting up tedious tasks. I hate doing that stuff by hand so being able to pass it off to copilot is great. But we already had tools that gave us 90% of the functionality copilot adds there, so it’s not super novel, and I’ve never had it handle anything properly complicated at all successfully (asking GPT-5 to do your dynamic SQL calls is inviting disaster, for example. Requires hours of reworking just to get close.)

> But we already had tools that gave us 90%

More reliable ones.

Deterministic ones

Fair, I’ve used it recently to translate a translations.ts file to Spanish.

But for repetitive code, I feel like it is kind of a slow down sometimes. I should have refactored instead.

Some code is boilerplate and can’t be distilled down more. It’s nice to point an AI to a database schema and say “write the Django models, admin, forms, and api for this schema, using these authentication permissions”. Yeah I’ll have to verify it’s done right, but that gets a lot of the boring typing out of the way.

I use it for writing code to call APIs and it is a huge boon.

Yeah, you have to check the results, but it’s way faster than me.

That’s fair.

This is a thing people miss. “Oh it can generate repetitive code.”

OK, now who’s going to maintain those thousands of lines of repetitive unit tests, let alone check them for correctness? Certainly not the developer who was too lazy to write their own tests and to think about how to refactor or abstract things to avoid the repetition.

If someone’s response to a repetitive task is copy-pasting poorly-written code over and over we call them a bad engineer. If they use an AI to do the copy-paste for them that’s supposed to be better somehow?

Similarly I find it very useful for if I’ve written a tool script and really don’t want to write the command line interface for it.

“Here’s a well-documented function - write an argparser for it”

…then I fix its rubbish assumptions and mistakes. It’s probably not drastically quicker but it doesn’t require as much effort from me, meaning I can go harder on the actual function (rather than keeping some effort in reserve to get over the final hump).
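
For anyone wondering what that kind of hand-off looks like, here is a minimal sketch. The `resize` function and its options are made up for illustration (they are not from anyone's real tool); the point is just that the argparse wrapper mirrors the function's parameters and stays boring.

```python
import argparse


def resize(path: str, width: int, keep_aspect: bool = True) -> str:
    """Hypothetical tool function: describe the resize it would perform."""
    mode = "keeping aspect ratio" if keep_aspect else "stretching"
    return f"resize {path} to width {width}, {mode}"


def build_parser() -> argparse.ArgumentParser:
    """Command-line wrapper whose options mirror resize()'s parameters."""
    parser = argparse.ArgumentParser(description="Resize an image (demo only).")
    parser.add_argument("path", help="input file")
    parser.add_argument("--width", type=int, required=True, help="target width in pixels")
    parser.add_argument("--stretch", action="store_true", help="ignore the aspect ratio")
    return parser


def main(argv=None) -> str:
    # argv=None makes argparse fall back to sys.argv[1:]
    args = build_parser().parse_args(argv)
    return resize(args.path, args.width, keep_aspect=not args.stretch)


# Passing the arguments explicitly keeps the demo independent of the real command line.
print(main(["cat.png", "--width", "200"]))
```

The part worth reviewing by hand is exactly the part the AI tends to guess at: which arguments are required, and what the defaults should be.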

I’ve had plenty of success using it to build things like docker compose yamls and the like, but for anything functional, it does often take a few tries to get it right. I never use its raw output for anything in production. Only as a leaping off point to structure things.

For the missing 10%: the folder with copies of the code you have already written doing that.

> (asking GPT-5 to do your dynamic SQL calls is inviting disaster, for example. Requires hours of reworking just to get close.)

Maybe it’s the dynamic SQL calls themselves that are inviting disaster?

Dynamic SQL in and of itself is not an issue, but the consequences (exacerbated by SQL’s inherent irrecoverability from mistakes - hope you have backups) have stigmatized its use heavily. With an understanding of good practice, a proper development environment and a close eye on the junior devs, there’s no inherent issue to using it.
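
As a rough sketch of what "good practice" can mean here: values go through placeholders, and anything that has to be spliced into the statement text comes from a fixed whitelist. This uses Python's built-in sqlite3 as a stand-in (not T-SQL), and the `users` table, `find_users`, and `SORTABLE_COLUMNS` are all invented for the example.

```python
import sqlite3

# Hypothetical whitelist: the only columns callers may sort by.
SORTABLE_COLUMNS = {"name", "created_at"}


def find_users(conn: sqlite3.Connection, min_age: int, sort_by: str = "name"):
    """Build the query dynamically, but never splice raw input into it."""
    if sort_by not in SORTABLE_COLUMNS:
        raise ValueError(f"cannot sort by {sort_by!r}")
    # Identifiers cannot be bound as parameters, so they come from the whitelist;
    # values always go through "?" placeholders.
    sql = f"SELECT name, age FROM users WHERE age >= ? ORDER BY {sort_by}"
    return conn.execute(sql, (min_age,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, age INTEGER, created_at TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?, ?)",
    [("bo", 41, "2021-01-01"), ("al", 35, "2022-01-01"), ("cy", 17, "2023-01-01")],
)
print(find_users(conn, 18))  # [('al', 35), ('bo', 41)]
```

A sort key like `"name; DROP TABLE users"` is rejected by the whitelist check before any SQL is built.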

> With an understanding of good practice, a proper development environment and a close eye on the junior devs, there’s no inherent issue to using it.

My feelings about C/C++ are the same. I’m still switching to Rust, because that’s what the company wants.

So much of the AI hype has been pointing to ten year old technology repackaged in a slick new interface.

AI is the iPod to the Zune of yesteryear.

Repackaging old technology in slick new interfaces is what we have been calling progress in computer software for 40+ years.

I mean… I like to think we’ve done a bit more than that. FFS, file compression alone has made leaps and bounds since the 3.5" floppy days.

Also, as a T-SQL guy, I gotta say there’s a world of difference between SQL 2008 and SQL 2022.

But I’ll spot you that a lot of the last 10-15 years has produced herculean efforts in answering the question “How can we squeeze a few more ads into your GUI?”

There have been a few “milestone moments” like MapReduce, Hadoop, etc. Still, there’s a whole lot of eye candy wrapped around the same old basic concepts.

I found that it only does well if the task is already well covered by the usual sources. Ask for anything novel and it shits the bed.

That’s because it doesn’t understand anything and is just vomiting forth output based on the code that was fed into it.

At absolute best.

My experience is it’s the bottom stack overflow answers. Making up bullshit and nonexistent commands, etc.

They should make it more like SO and have it chastise you for asking a stupid question you should already know the answer to lol

If you know what you want, its automatic code completion can save you some typing in those cases where it gets it right (for repetitive or trivial code that doesn’t require much thought). It’s useful if you use it sparingly and can see through its bullshit.

For junior coders, though, it could be absolute poison.

At least when I’m cleaning up after shit devs who used Stack Overflow, I can usually search using a fragment of their code and find where they swiped it from and get some clue what the hell they were thinking. Now that they’re all using AI chatbots, there’s no trace.

In the beginning there were manufacturer’s manuals, spec sheets, etc.

Then there were magazines, like Byte, InfoWorld, Compute! that showed you a bit more than just the specs

Then there were books, including the X for Dummies series that purported to teach you theory and practice

Then there was Google / Stack Overflow and friends

Somewhere along there, where depends a lot on your age, there were school / University courses

Now we have “AI mode”

Each step along that road has offered a significant speedup, connecting ideas to theory to practice.

I agree, all the “magic bullet” AI hype is far overblown. However, with AI, something new I can do is interactively develop a specification and a program. Throw out the code several times while the spec gets refined, re-implemented, tried in different languages with different libraries. It’s still only good for “small” projects, but less than a year ago “small” meant less than 1000 lines of code. These days I’m seeing 300 lines of specification turn into 1500-3000 lines of code and have it running successfully within half a day.

I don’t know if we’re going to face a Kurzweilian singularity where these things start improving themselves at exponential rates, or if we’ll hit another 30 year plateau like neural nets did back in the 1990s… As things are, Claude helps me make small projects several times faster than I could ever do with Google and Stack Overflow. And you can build significant systems out of cooperating small projects.

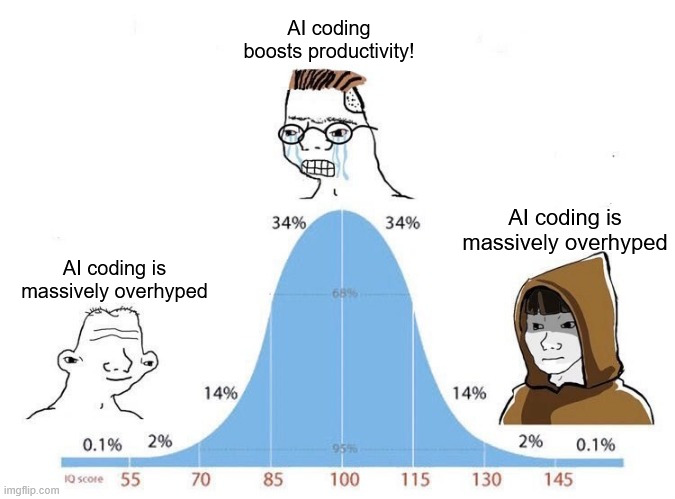

“No Duh,” say senior developers everywhere.

I’m so glad this was your first line in the post

No duh, says a layman who never wrote code in his life.

Oddly enough, my grasp of coding is probably the same as the guy in the middle but I still know that LLM generated code is garbage.

Yeah, I actually considered putting the same text on all 3, but we gotta put the idiots that think it’s great somewhere! Maybe I should have put it with the dumbest guy instead.

Think this one needs a bimodal curve with the two peaks representing the “caught up in the hype” average coder and the realistic average coder.

Agreed, that’s why it didn’t feel quite right when I made it.

Yeah, there’s definitely morons out there who never bothered to even read about the theory of good code design.

I guess I’m one of the idiots then, but what do I know. I’ve only been coding since the 90s

All the best garbage to learn from, to debug, debug, debug, sharpening those skills.

That’s kinda wrong though. I’ve seen LLMs write pretty good code, in some cases even doing something clever I hadn’t thought of.

You should treat it as any junior though, and read the code changes and give feedback if needed.

Thing is both statements can be true.

Used appropriately and in the right context, LLMs can accelerate some select work.

But the hype level is ‘human replacement is here (or imminent, depending on if the company thinks the audience is willing to believe yet or not)’. Recently Anthropic suggested someone could just type ‘make a slack clone’ and it’ll all be done and perfect.

This. Like with any tool you have to learn how and when to use it. I’ve started to get the hang of what tasks it improves but I don’t think I’ve regained the hours I’ve spent learning it yet.

But as the tool and my understanding of it improves it’ll probably happen some day.

Heh. That’s a fun chart. If that’s programming aptitude, I scored 80 on that part of the broad spectrum aptitude test I got a sneak-peek chance to do several parts of. Well now I know why I’m so easily in agreement with “senior coders”, if it is programming aptitude quotient. If it’s just iq, … pulls hood up to block the glare.

Daunting that there may be a middling bias getting apparent advantages. Evolution may not serve us well like that.

And many between “senior developers everywhere” and “a layman who never wrote code in his life”.

Like me, I’m saying it too. A big ol “No duh”.

Disbelieve the hype.

If not to editorialize, what else is the text box for? :)

The most immediately understandable example I heard of this was from a senior developer who pointed out that LLM generated code will build a different code block every time it has to do the same thing. So if that function fails, you have to look at multiple incarnations of the same function, rather than saying “oh, let’s fix that function in the library we built.”

Yeah, code bloat with LLMs is fucking monstrous. If you use them, get used to immediately scouring your code for duplications.
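
A toy illustration of the "fix it once in the library" version, as opposed to a fresh near-copy at every call site. `slugify`, `post_url` and `tag_url` are made-up names for the sketch, not from any real codebase.

```python
import re


def slugify(title: str) -> str:
    """Single shared helper: lowercase, collapse runs of non-alphanumerics to '-'."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def post_url(title: str) -> str:
    # Both call sites reuse the helper, so a bug in slug handling is fixed in one place.
    return f"/posts/{slugify(title)}"


def tag_url(tag: str) -> str:
    return f"/tags/{slugify(tag)}"


print(post_url("Hello, World!"))  # /posts/hello-world
print(tag_url("C++ Tips"))        # /tags/c-tips
```

When generated code inlines its own slightly different slug logic in each of those functions, that is the duplication you end up scouring for.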

Yeah if I use it and it generates more than 5 lines of code, now I just immediately cancel it out because I know it’s not worth even reading. So bad at repeating itself and failing to reasonably break things down into logical pieces…

With that I only have to read some of its suggestions, still throw out probably 80% entirely, and fix up another 15%, and actually use 5% without modification.

There are tricks to getting better output from it, especially if you’re using Copilot in VS Code and your employer is paying for access to models, but it’s still asking for trouble if you’re not extremely careful, extremely detailed, and extremely precise with your prompts.

And even then it absolutely will fuck up. If it actually succeeds at building something that technically works, you’ll spend considerable time afterwards going through its output and removing unnecessary crap it added, fixing duplications, securing insecure garbage, removing mocks (God… So many fucking mocks), and so on.

I think about what my employer is spending on it a lot. It can’t possibly be worth it.

You mean relying blindly on a statistical prediction engine to attempt to produce sophisticated software without any understanding of the underlying principles or concepts doesn’t magically replace years of actual study and real-world experience?

But trust me, bro, the singularity is imminent, LLMs are the future of human evolution, true AGI is nigh!

I can’t wait for this idiotic “AI” bubble to burst.

AI coding is the stupidest thing I’ve seen since someone decided it was a good idea to measure the code by the amount of lines written.

More code is better, obviously! Why else would a website to see a restaurant menu be 80Mb? It’s all that good, excellent code.

It did solve my impostor syndrome though. Turns out a bunch of people I saw to be my betters were faking it all along.

Imagine if we did “vibe city infrastructure”. Just throw up a fucking suspension bridge and we’ll hire some temps to come in later to find the bad welds and missing cables.

Almost like it’s a desperate bid to blow another stock/asset bubble to keep ‘the economy’ going, from C suite, who all knew the housing bubble was going to pop when this all started, and now is.

Funniest thing in the world to me is high and mid level execs and managers who believe their own internal and external marketing.

The smarter people in the room realize their propaganda is in fact propaganda, and are rolling their eyes internally that their henchmen are so stupid as to be true believers.

Might be there someday, but right now it’s basically a substitute for me googling some shit.

If I let it go ham, and code everything, it mutates into insanity in a very short period of time.

I’m honestly doubting it will get there someday, at least with the current use of LLMs. There just isn’t true comprehension in them, no space for consideration in any novel dimension. If it takes incredible resources for companies to achieve sometimes-kinda-not-dogshit, I think we might need a new paradigm.

A crazy number of devs weren’t even using EXISTING code assistant tooling.

Enterprise grade IDEs already had tons of tooling to generate classes and perform refactoring in a sane and algorithmic way. In a way that was deterministic.

So many use cases people have tried to sell me on (boilerplate handling) and I’m like “you have that now and don’t even use it!”.

I think there is probably a way to use llms to try and extract intention and then call real dependable tools to actually perform the actions. This cult of purity where the llm must actually be generating the tokens themselves… why?

I’m all for coding tools. I love them. They have to actually work though. Paradigm is completely wrong right now. I don’t need it to “appear” good, i need it to BE good.

Exactly. We’re already bootstrapping, re-tooling, and improving the entire process of development to the best of our collective ability. Constantly. All through good, old fashioned, classical system design.

Like you said, a lot of people don’t even put that to use, and they remain very effective. Yet a tiny speck of AI tech and its marketing is convincing people we’re about to either become gods or be usurped.

It’s like we took decades of technical knowledge and abstraction from our Computing Canon and said “What if we didn’t use that anymore?”

This is the smoking gun. If the AI hype boys really were getting that “10x engineer” out of AI agents, then regular developers would not be able to even come close to competing. Where are these 10x engineers? What have they made? They should be able to spin up whole new companies, with whole new major software products. Where are they?

They are statistical prediction machines. The more they output, the larger the portion of their “context window” (statistical prior) becomes the very output they generated. It’s a fundamental property of the current LLM design that the snake will eventually eat enough of its tail to puke garbage code.

I think we’ve tapped most of the mileage we can get from the current science, the AI bros conveniently forget there have been multiple AI winters, I suspect we’ll see at least one more before “AGI” (if we ever get there).

Glad someone paid a bunch of worthless McKinsey consultants what I could’ve told you myself

It is not worthless. My understanding is that management only trusts sources that are expensive.

Yep, going through that at work, they hired several consultant companies and near as I can tell, they just asked employees how the company was screwing up, we largely said the same things we always say to executives, they repeated them verbatim, and executives are now praising the insight on how to fix our business…

it’s slowing you down. The solution to that is to use it in even more places!

Wtf was up with that conclusion?

I don’t think it’s meant to be a conclusion. The article serves as a recap of several reports and studies about the effectiveness of LLMs with coding, and the final quote from Bain & Company was a counterpoint to the previous ones asserting that productivity gains are minimal at best, but also that measuring productivity is a grey area.

It remains to be seen whether the advent of “agentic AIs,” designed to autonomously execute a series of tasks, will change the situation.

“Agentic AI is already reshaping the enterprise, and only those that move decisively — redesigning their architecture, teams, and ways of working — will unlock its full value,” the report reads.

“Devs are slower with and don’t trust LLM based tools. Surely, letting these tools off the leash will somehow manifest their value instead of exacerbating their problems.”

Absolute madness.

How are you interpreting it that way? Did you miss a sentence or something in the quote?

It’s not interpretation, it’s extrapolation.

There’s quotes.

I code with LLMs every day as a senior developer, but agents are mostly a big lie. LLMs are great as an information index and for rubber duck chats, which is already an incredible feature of the century, but agents are fundamentally bad. Even for Python they are intern-level bad. I was just trying the new Claude and instead of using Python’s pathlib.Path it reinvented its own file system path utils, and pathlib is not even some new Python feature - it has been the de facto way to manage paths for at least 3 years now.

That being said, when prompted in great detail with exact instructions agents can be useful, but that’s not what’s being sold here.

After so many iterations it seems like a fundamental breakthrough in AI tech is still needed for agents, as diminishing returns are hitting hard now.

Oh yes. The Great `pathlib`. The Blessed `pathlib`. Hallowed be it and all it does.

I’m a Ruby girl. A couple of years ago I was super worried about my decision to finally start learning Python seriously. But once I ran into `pathlib`, I knew for sure that everything will be fine. Take an everyday headache problem. Solve it forever. Boom. This is how standard libraries should be designed.

I disagree. Take a routine problem and invent a new language for it. Then split it into various incompatible dialects, and make sure in all cases it requires computing power that no one really has.

Pathlib is very nice indeed, but I can understand why a lot of languages don’t do similar things. There are major challenges implementing something like that. Cross-platform functionality is a big one, for example. File permissions between Unix systems and Windows do not map perfectly from one system to another which can be a maintenance burden.

But I do agree. As a user, it feels great to have. And yes, also in general, the things Python does with its standard library are definitely the way things should be done, from a user’s point of view at least.
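
For anyone who hasn’t used it, a small sketch of the kind of thing `pathlib` already covers, which is why hand-rolled path utils are so pointless. The `describe` helper and the `app/settings.toml` path are just made up for the demo; the `Path` attributes are standard library.

```python
from pathlib import Path


def describe(path: Path) -> dict:
    """Pull apart a path using only attributes pathlib already provides."""
    return {
        "name": path.name,                         # final component
        "stem": path.stem,                         # name without the suffix
        "suffix": path.suffix,                     # extension, including the dot
        "backup": path.with_suffix(".bak").name,   # same name, different extension
    }


# The "/" operator joins components with the right separator for the platform.
config = Path("app") / "settings.toml"
print(describe(config))
# {'name': 'settings.toml', 'stem': 'settings', 'suffix': '.toml', 'backup': 'settings.bak'}
```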

If it wasn’t for all the AI hype that it’s going to do everyone’s job, LLMs would be widely considered an amazing advancement in computer-human interaction and human assistance. They are so much better than using a search engine to parse web forums and stack overflow, but that’s not going to pay for investing hundreds of billions into building them out. My experience is like yours - I use AI chat as a huge information index mainly, and helpful sounding board occasionally, but it isn’t much good beyond that.

> They are so much better than using a search engine to parse web forums and stack overflow,

The hallucinations (more accurately bullshitting) and the fact they have to get new training data but are discouraging people from engaging in the structures that do so make this highly debatable

I agree that it is certainly debatable. However, my experience has been that information extracted about, say what may cause a strange error message from some R output, has been at least as reliable as random stack overflow posts - however, I get that answer instantly rather than after significant effort with a search engine. It can often find actual links better than a search engine for esoteric problems as well. This, however is merely a relative improvement, and not some world-changing event like AI boosters will claim, and it’s one of the only use-cases where AI provides a clear advantage. Generating broken code isn’t useful to me.

I will concur with the whole ‘llm keeps suggesting to reinvent the wheel’

And poorly. Not only did it not use a pretty basic standard library to do something, its implementation is generally crap. For example, it offered up a solution that was hard-coded to IPv4, and the context was very IPv6-heavy.
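
To make that concrete in Python terms (an assumption on my part, the original case may have been another language): the standard `ipaddress` module parses both families with the same call, so there is no reason to hand-roll an IPv4-only parser. `describe_address` and `in_network` are hypothetical helper names.

```python
import ipaddress


def describe_address(text: str) -> str:
    """Parse IPv4 or IPv6 with the standard library; raises ValueError on garbage."""
    addr = ipaddress.ip_address(text)
    return f"IPv{addr.version} {addr.compressed}"


def in_network(text: str, cidr: str) -> bool:
    """Membership test that works the same way for both address families."""
    return ipaddress.ip_address(text) in ipaddress.ip_network(cidr)


print(describe_address("192.168.1.10"))                             # IPv4 192.168.1.10
print(describe_address("2001:0db8:0000:0000:0000:0000:0000:0001"))  # IPv6 2001:db8::1
print(in_network("2001:db8::1", "2001:db8::/32"))                   # True
```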

I have a theory that it’s partly because a bunch of older StackOverflow answers have more votes than newer ones using new features. More referring to not using relatively new features as much as it should.

I’d wager that the votes are irrelevant. Stack Overflow is generously <50% good code and is mostly people saying ‘this code doesn’t work – why?’ and that is the corpus these models were trained on.

I’ve yet to see something like a vibe coding livestream where something got done. I can only find a lot of ‘tutorials’ that tell how to set up tools. Anyone want to provide one?

I could… possibly… imagine a place where someone took quality code from a variety of sources and generate a model that was specific to a single language, and that model was able to generate good code, but I don’t think we have that.

Vibe coders: Even if your code works and seems to be a success, do you know why it works, how it works? Does it handle edge cases you didn’t include in your prompt? Does it expose the database to someone smarter than the LLM? Does it grant an attacker access to the computer it’s running on, if they are smarter than the LLM? Have you asked your LLM how many 'r’s are in strawberry?

At the very least, we will have a cyber-security crisis due to vibe coding; especially since there seems to be a high likelihood of HR and Finance vibe coders who think they can do the traditional IT/Dev work without understanding what they are doing and how to do it safely.

I have been vibe coding a whole game in JavaScript to try it out. So far I have gotten a pretty ok game out of it. It’s just a simple match three bubble pop type of thing so nothing crazy but I made a design and I am trying to implement it using mostly vibe coding.

That being said the code is awful. So many bad choices and spaghetti code. It also took longer than if I had written it myself.

So now I have a game that’s kind of hard to modify haha. I may try to setup some unit tests and have it refactor using those.

Sounds like vibecoders will have to relearn the lessons of the past 40 years of software engineering.

As with every profession every generation… only this time on their own because every company forgot what employee training is and expects everyone to be born with 5 years of experience.

Wait, are you blaming AI for this, or yourself?

Blaming? I mean it wrote pretty much all of the code. I definitely wouldn’t tell people I wrote it that way haha.

I miss the days when machine learning was fun. Poking together useless RNN models with a small dataset to make a digital Trump that talked about banging his daughter, and endless nipples flowing into America. Exploring the latent space between concepts.